The AI, Media and Democracy Lab held its second annual community update meeting on December 10th. In the beautiful Sweelinck room of the Institute for Advanced Studies (our home on Tuesdays), we welcomed our partners and researchers for an afternoon of collaboration and celebration. With this meeting, we aimed to update partners on the impactful collaborations of the past year and to learn what should be on next year’s agenda.

In general, our annual community meetings offer us the opportunity to reflect on the Lab’s achievements, deepen connections with partners, and identify common goals to shape a comprehensive research agenda for the future. This year, we invited our partners to present the topics and challenges addressed in our collaborative projects, placing their perspectives at the heart of the meeting.

The BBC Challenge: Responsible AI Applications

Bronwyn Jones, Translational Fellow at the University of Edinburgh and the BBC, started us off with a recap of the collaborations between the AI Media and Democracy Lab and the BBC Research and Development Responsible Innovation Center. Carried out in collaboration with BRAID (Bridging Responsible AI Divides), the project aims to translate research into practice and vice versa by building better bridges between researchers, policymakers, and industry partners.

Our collaboration with BRAID has produced two projects in which our researchers Hannes Cools and Anna Schjøtt Hansen were embedded in BBC teams, with the goal of uncovering responsible ways to integrate AI technologies into practice. Read about the BBC project and the resulting academic publications in detail here.

Bronwyn highlighted how productive this close relationship between research and practice has been. The inclusion of scholars from the humanities, social sciences, and arts was a valuable addition for addressing social needs, requirements, and expectations in an environment that had previously been dominated by a purely technical focus. The interdisciplinary perspectives contributed through the collaboration with the AI Media and Democracy Lab are a particular strength in that area. Bronwyn concluded by expressing her hope to continue this collaboration in the coming years, to strengthen the future of democracy and to translate big questions into practice. She also hopes to share the knowledge gained from this collaboration with global broadcasters beyond the BBC.

The DPG Media Challenge: Modular Journalism

Mariëlle Hendriks, consumer insight expert at DPG Media, followed by presenting the central challenges they aim to tackle. At a time when news is available in many forms, print readers are slowly moving away from the traditional format, while digital readers are increasingly avoiding news. To move print readers online and to reach digital readers more broadly, DPG Media is exploring modular journalism in collaboration with our researchers. Modular journalism might be the best solution at a time when every reader has different preferences and perspectives. A prototype was developed in which users could scroll between different channels and see information presented in alternative ways. In a project that introduced this prototype to focus groups of various demographics and backgrounds, readers were generally enthusiastic about the prospect of modular journalism and saw opportunities to engage with news in ways they had not considered before.

Mariëlle highlighted the promising insights from this project and the possibilities for continuing it in the future. The collaboration is also of interest because it both helps DPG Media optimise its profit models and gives our researchers an opportunity to reflect on the topics users show interest in, as well as their motivations.

The ZWART Challenge: Ethical AI Feedback Bot

Omroep Zwart, the “newest public broadcaster in the world”, was presented by Gianni Lieuw-A-Soe, the director and co-founder of the organisation. Established out of a desire for a more diverse and representative Dutch broadcaster, Omroep Zwart aims to create a sense of belonging and to represent a diverse Dutch society. To streamline the process of receiving feedback on content creation, they are developing AAVE, the Artificial Intelligence Audience Validation Assistant. It uses a large language model to create digital twins that content creators can then consult for feedback and input. If a creator wants to make something that involves a certain community, it is important to receive feedback from members of that community in order to do it justice. By creating digital twins of people from particular societal groups, AAVE aims to make this process more accessible, reducing reliance on resource-intensive focus groups. As the project is in its early stages, Omroep Zwart is now collaborating with the AI Media and Democracy Lab to realise this prototype in a responsible manner.

The NEMO Kennislink Challenge: Responsible Recommender Sandbox

To round off the partner presentations, Ruth Visser from NEMO Kennislink reflected on the ongoing recommender system sandbox project we are conducting together. NEMO Kennislink is the journalistic platform of the NEMO Science Museum and publishes independently about science, technology, and society. Through articles, podcasts, and interactive quizzes, it examines real-life problems through various lenses. NEMO Kennislink aims to optimise its readers’ experience and reach a broader audience, especially younger people. In the recommender system sandbox project, the team explores different ways to responsibly connect readers with relevant content and recommendations and to foster deeper engagement with younger audiences. The sandbox provides a controlled, isolated environment in which to experiment with recommender systems in various ways without affecting the live network or its users. The main challenge they face is balancing content diversity with relevance while ensuring fairness and transparency.

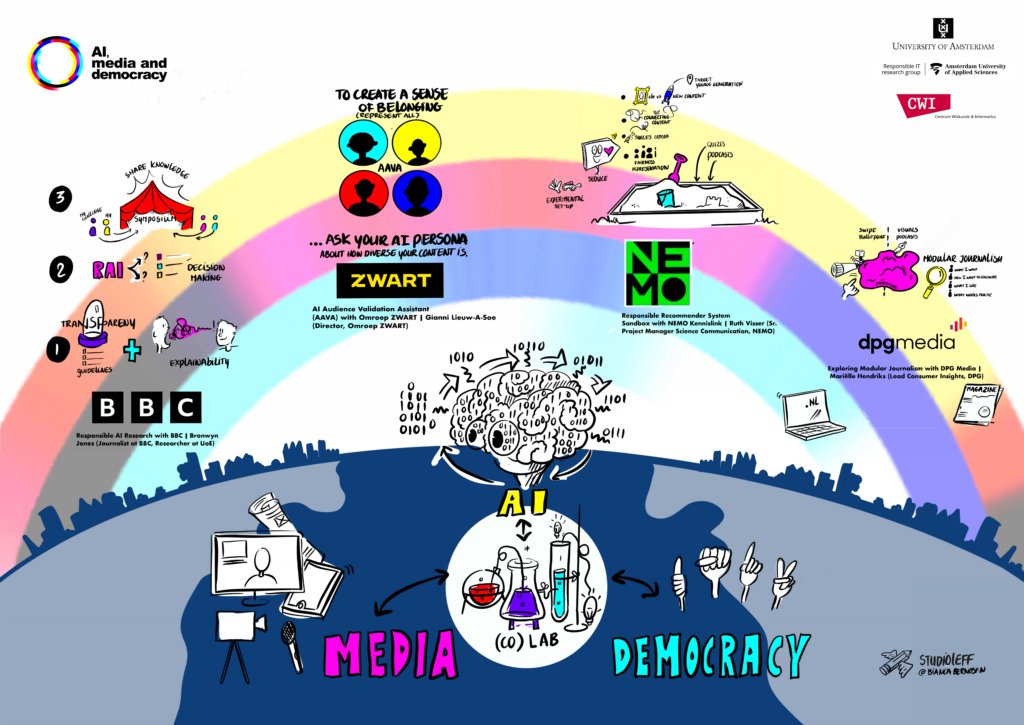

The Research Agenda: A Collaborative Mapping Exercise

After the insightful presentations from our partners, the annual community meeting transitioned into an interactive mapping session with Yuri Westplat from the HvA Responsible IT Lectoraat and Bianca Berndsen from Studio Leff. As a graphic recorder, Bianca specialises in creating visualisations of organisations and their connections. In a collaborative exercise, researchers and partners were invited to map out their areas of expertise, their methodologies, and the challenges they had encountered. The aim was to create a comprehensive overview of research topics and angles and to highlight research gaps that can be filled in the future.

The mapping results will help connect partners with researchers who specialise in the topics that concern them, while researchers can find collaborators who help make academic work more concrete and tangible. This ongoing exchange is crucial to upholding the central values of the AI Media and Democracy Lab: developing responsible tools for engaging with AI in the media and strengthening democracy.

We are enthusiastic about deepening our collaborations with partners in the coming years and expanding beyond the Dutch context. We look forward to continuing tangible research that furthers efforts toward a healthy media landscape and democracy.

We would like to thank the speakers and everyone who attended both in-person and online, as well as the IAS team for accommodating us. Finally, we would like to thank the AI Media and Democracy Lab Manager Sara Spaargaren for her organisational efforts.