Written by Hannes Cools, Claes de Vreese, Abdo El Ali, Natali Helberger, Pooja Prajod, Nicolas Mattis, Sophie Morosoli, Laurens Naudts and Teresa Weikmann

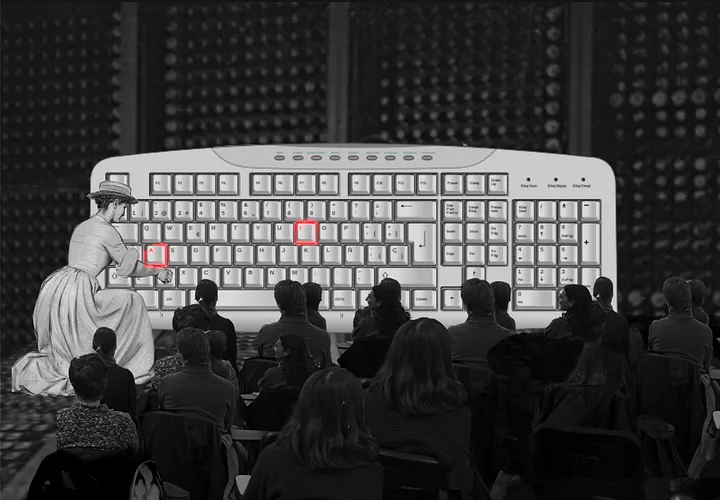

The question of whether and how to disclose the use of AI in news and journalism remains a complex and unresolved issue. While transparency is often seen as a key principle of journalism, the effectiveness of AI disclosures is far from clear. We offer five perspectives from research(-in-progress) on this transparency puzzle.


Research on the effectiveness of AI disclosures shows mixed results and remains partly inconclusive. Some studies indicate that audiences appreciate transparency, while others show that disclosures may have little impact on trust or may even reduce credibility if users perceive AI-generated content as less reliable. Furthermore, different stakeholders, such as news organizations, policymakers, and audiences, may have conflicting expectations.

This raises some fundamental questions:

- What do we know about AI disclosures and the research conducted about them?

- How do AI disclosures in news articles influence audience perceptions of credibility and trust?

- Which types of audiences notice these AI disclosures, which groups do they benefit, and in what ways?

Without clear evidence of their effectiveness, AI disclosures risk becoming symbolic gestures rather than meaningful interventions. Complicating matters further, existing knowledge about AI disclosures in journalism is fragmented across multiple fields, leading to knowledge silos. For example, regulatory frameworks focus on legal obligations and ethical standards, while user experience (UX) research examines how design elements influence reader perception. At the same time, studies on audience preferences explore whether users actually want AI disclosures and how they interpret them.

In short, these areas of research often operate independently, leading to gaps in our understanding of AI disclosures. In this article, we examine AI disclosures in news organizations from the different disciplines and areas of expertise present in the AI, Media & Democracy Lab, where we work. In doing so, we hope to contribute to solving the inherently complex puzzle that AI disclosures pose for news organizations and society. In addition, we offer some recommendations and considerations for further research.

Insights from News Organizations: Principles & Practices

The introduction of generative AI tools in late 2022 forced newsrooms to rapidly develop charters, principles, and guidelines addressing AI transparency and ethics. Relatedly, one of the central questions at the organizational level is:

- How can news organizations implement coherent and consistent AI disclosure practices based on the guidelines they put forward without losing the audience’s trust?

The development of these principles aligns with journalism’s longstanding tradition of self-regulation, where news organizations rely on press councils and internal guidelines rather than government oversight. Across news organizations, however, these AI guidelines vary considerably and are often ambiguous, particularly in how they operationalize core principles like human oversight, transparency, and bias mitigation.

While transparency is a widely endorsed principle, its practical implementation in the form of disclosures varies significantly across organizations. For instance, the Dutch Press Agency (ANP) adheres to a strict human>machine>human production chain, ensuring that humans oversee AI processes at every stage. Similarly, Nucleo, a Brazilian digital-native outlet, states that it will never publish AI-generated content without human review or allow AI to serve as the final editor. Meanwhile, The Guardian emphasizes openness with readers, and CBC follows a “no surprises” rule, ensuring full disclosure whenever AI-generated content is used.

This lack of consistency in AI disclosure policies presents a major challenge for audience engagement. Ambiguous or inconsistent guidelines between outlets make it difficult for news organizations to communicate clearly about their AI use, which could lead to audience confusion and skepticism. This credibility gap underscores the need for a more coherent and standardized approach to AI disclosures in journalism.

Insights from the Audience Perspective: Perceptions & Concerns

Understanding how audiences perceive AI disclosures is crucial to evaluating their effectiveness. One of the central questions from the audience perspective on AI disclosures is:

- How do audiences think about AI use in news and what are their information needs when it comes to disclosing AI use in news?

Group interviews with Dutch audience members have revealed a strong desire for transparency, particularly about what data AI relies on and how it influences the news they consume. Audiences also expressed strong concerns about privacy and ethical AI use, wanting to be assured that news organizations are using AI responsibly.

Interestingly, the group interviews revealed a clear paradox: while readers desire absolute transparency, many also trust established news organizations to use AI responsibly without needing to know the specific details at a more granular level. For example, some interviewees argued that a general statement on the use of AI on a trusted news organization’s website is sufficient, rather than an explanation attached to every article. When asked where this trust comes from, interviewees’ explanations revealed that it is often based on the reputation of the news organization rather than a deep understanding of the AI technologies at play.

The challenge for news organizations is to navigate this paradox by implementing disclosures in a more nuanced way and by better understanding what audiences’ information needs are. For example, detailed disclosures may not be necessary for less impactful AI tasks, such as spell-checking or translation. By contrast, more significant tasks, like generating headlines or writing articles, might require more explicit disclosures. By offering different levels of transparency based on the AI’s role in the news production process, news organizations can build trust while addressing readers’ concerns.

Insights from User & Design Research

Encounters with AI-generated content shape not only what audiences consume, but how they interpret the broader relationship between humans and machines in journalism. One of the central questions that arises from user & design research is:

- How can evolving transparency obligations be translated into disclosures that are both legally compliant and user-friendly?

Drawing on expertise in human-computer interaction (HCI), communication, law, and design can help in designing disclosures that are meaningful, accessible, and context-sensitive. Last year, workshops on user and design research conducted by Abdo El Ali and colleagues brought together professionals from diverse fields to explore the implications of AI disclosure mandates in journalism. A recurring theme was the tension between the need for informative disclosures and the risk of information overload, where users may be overwhelmed by too much detail. Concepts like personalization, user experience, and cognitive load emerged as critical to understanding how both journalists and audiences engage with AI disclosures.

The findings from the workshops echo concerns in the literature, particularly the concept of contextual integrity proposed by Nissenbaum, and underscore that effective transparency must balance relevance with simplicity. Design research suggests that not all disclosures should be treated equally. While users want to know if AI played a role in content creation, they are less concerned with technical specifics than with understanding the exact nature of the human-AI collaboration.

Other researchers have highlighted that concise, layered disclosures can reduce cognitive load while preserving informative value. Human-computer interaction research points toward adaptive systems that tailor the depth and timing of disclosures to user preferences or behaviors. Likewise, visual and interactive techniques could help illustrate AI’s contribution to a news story, enabling audiences to grasp how journalistic judgment and machine input coexist in the final product. Such user-centric, layered disclosures could offer a promising path toward intelligent systems capable of providing transparency that informs without alienating users.

Insights from Communication Effect Studies regarding AI Disclosures

The disclosure of AI involvement in news articles has been shown to affect perceptions of credibility and trustworthiness. One of the central questions is therefore:

- How do AI disclosures in news articles influence audience perceptions of credibility and trust, and under what conditions do these effects vary across different audiences?

One of the primary concerns with AI disclosures is whether audiences even notice them. Research indicates that disclosure labels need to be prominently displayed and carefully worded to ensure they are not overlooked or misinterpreted. The design and wording of these labels are crucial, as terms like ‘AI-generated’ are more easily understood than more ambiguous terms like ‘artificial’ or ‘manipulated,’ which could imply that the content is false or unreliable.

Experimental research also shows that, in many cases, AI disclosures lead to negative effects on the perceived credibility and trustworthiness of news. This effect is particularly pronounced when generic labels are used, as audiences may interpret these labels as signaling full automation, even when human oversight is involved. Yet, these effects may not be universal. For instance, the exact impact of AI disclosures may vary depending on individual factors, such as whether audiences perceive AI as particularly objective and transparent. News organizations therefore face a delicate balance between maintaining journalistic transparency and protecting their commercial interests.

Ethical and Legal Perspectives on AI Disclosures

The ethical and legal challenges of AI disclosures are significant. Generative AI technologies have the potential to distort people’s information environments by producing biased, false, or misleading content. One of the central questions is therefore:

- How can the law help citizens and society better understand the informational risks associated with AI systems, and what legal rights and remedies should citizens have to effectively combat these risks?

The European Union’s AI Act attempts to address these concerns by imposing transparency and disclosure obligations on AI providers and deployers. In recent work, however, two scholars from the lab critique the EU’s overreliance on transparency as its core empowerment mechanism. First, the AI Act only requires that citizens be made aware that they are interacting with AI, nothing more. People are not informed about the risks associated with AI exposure. Second, the AI Act offers few actionable rights that give citizens control over their informational environment. In fact, where AI is used by media organizations, there might not be any transparency obligation to begin with. While pictures and audio-visual media are subject to heightened scrutiny, no transparency duties in the AI Act apply to textual content over which editorial responsibility and control have been exercised. Finally, labels signaling AI interaction do not act as truth labels; they give little indication of the reliability of the content consumed, since AI-generated news content can be factually correct.

While transparency is important, it does not necessarily guarantee that citizens are informed about the risks associated with AI-generated content. Furthermore, the AI Act does not require transparency for all types of content, particularly in cases where editorial responsibility has been exercised. The legal framework’s failure to address these nuances highlights the need for a more robust approach to AI disclosures that goes beyond mere labelling.

The ongoing ethical and legal debate around AI disclosures must focus on the broader questions of when and why disclosure matters. It is not enough to simply inform citizens that they are interacting with AI; they must also be empowered with the tools to understand and control the information they encounter. This could include the ability for citizens to filter, contest, and report biased or misleading AI-generated content. By providing citizens with more agency, lawmakers can help protect public trust in the media and democratic deliberation and participation.

Ways Forward: Towards Meaningful AI Disclosures in News

As work on AI disclosures continues to evolve, several knowledge gaps remain. There is limited understanding of the long-term impact of AI disclosures on audience trust and perception. Research so far has focused primarily on Western democracies, leaving open questions about the effectiveness of AI disclosures in non-Western media systems and different legal contexts. Additionally, the effectiveness of different transparency strategies, whether through content labels, interactive explanations, or editorial statements, remains unclear. Longitudinal studies are needed to assess how AI disclosures affect audiences over time. Moreover, there are potential unintended side effects of AI disclosures that have not yet been fully explored. For example, how might disclosures influence people’s behaviour or decision-making in the long term? These questions will require ongoing research and reflection as AI continues to play a larger role in journalism.

In conclusion, the question of how to disclose AI in journalism remains complex and multifaceted. It requires balancing transparency, usability, and the practical needs of news organizations. By adopting a more integrated approach that considers user experience, legal and ethical considerations, and audience preferences, news organizations can create more effective AI disclosure practices. As AI continues to shape the future of journalism, it is crucial that transparency not only informs audiences but also builds trust in the media’s ability to use AI responsibly and ethically.

More information on the current projects of the AI, Media & Democracy Lab can be found here.