The Centrum Wiskunde & Informatica (CWI) organised a two-day workshop at Amsterdam Science Park on what large language models (LLMs) can do for media and society, and what impact they may have. The event consisted of presentations, interactive sessions and panel discussions with experts from academia, industry, and the European legislature, who addressed questions about language models from various angles.

Foundation models (including large language models and multi-modal systems) have significantly expanded the possibilities for understanding, analysing, and generating human language in recent years. These models, based on artificial neural networks trained on large collections of documents, make it possible to perform advanced tasks related to language, audio, video, and image processing. The workshop set out to discuss and advance recent developments in LLMs from both a technical and a societal point of view.

The first day started with introductory remarks by organisers Davide Ceolin and Ton de Kok, followed by several presentations on large language models in a European context, their effects on societal polarisation and political extremism, and questions of authenticity in journalism.

A contribution that stood out in particular was Adam Henschke’s presentation on AI and post-reality politics, titled “Why flooding the political zone with shit matters”. At a time when online users drown in an overwhelming mix of truth, non-truth, and untruth, AI is a steadily accelerating force to be reckoned with. Adam argues that there is a definite need to curate online information to avoid drowning in it, but the echo chambers and filter bubbles this curation often produces can have problematic effects on politics. Equally problematic is the approach of “flooding the zone with shit”, a political strategy that deliberately aims to overwhelm the public with information, implemented by Steve Bannon, a strategist to Donald Trump.

Reacting to this is challenging. An immediate response might be public outrage, but it is critical to understand how outrage feeds the attention that media profit from, and how it further perpetuates polarisation and filter bubbles. Another possible reaction is to dismiss the concept of shared truths and look only for information that confirms existing beliefs, which is a driving force behind echo chambers. So is combatting the shit-flood even feasible?

Adam stresses the importance of democracies treating social media as critical infrastructure and ensuring public safety on it the same way they would on roads, waterways, or airways. Crucial to this are offering education that builds society’s ability to reflect on media and to understand when and how media are limited, and building public institutions that are worthy of trust.

Contributions from the AI, Media and Democracy Lab

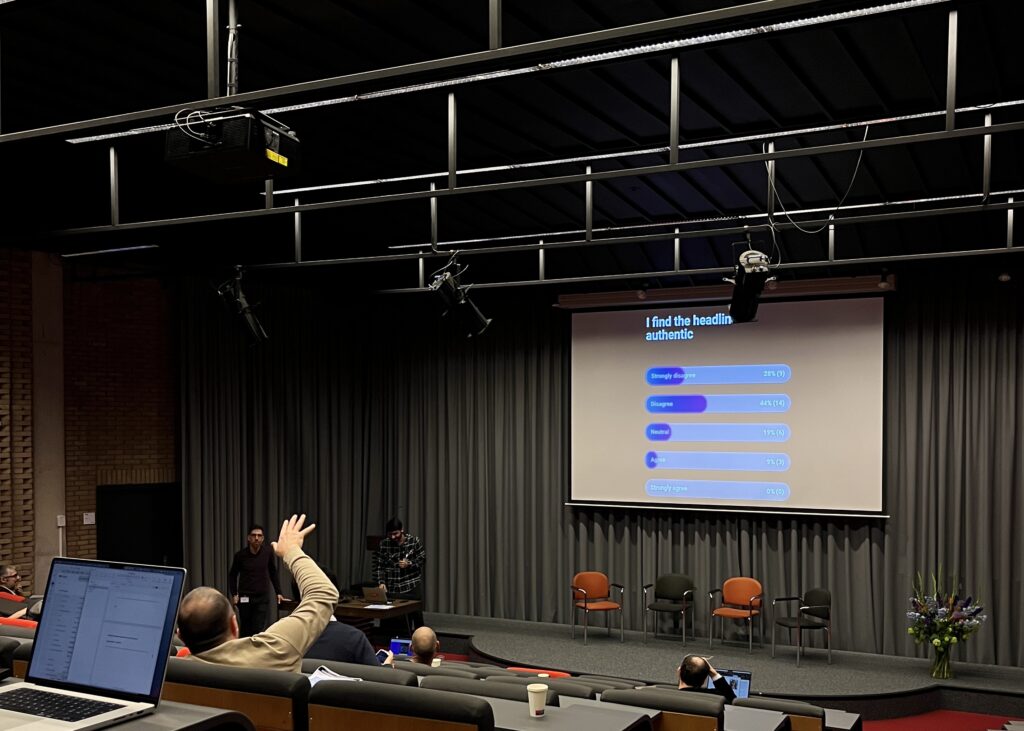

On day two, our researchers Karthikeya Puttur Venkatraj and Abdallah El Ali led an interactive session on AI in journalism. They conducted a live experiment with the workshop attendees on AI detection in journalistic publications. The experiment presented participants with news pieces, each consisting of a headline, an image, and text, and each labelled as either human-made or AI-generated. Some of the examples were AI-generated and some were written and composed by humans, but not all were correctly labelled. Participants were asked whether they believed the label matched the example, whether they found the headline appealing, and whether they found the headline authentic.

The public polling sparked engaging discussions between participants and showed that, although the votes were never entirely unanimous, the majority of attendees were generally able to tell AI-generated from human-made journalistic content, despite the false labels. It was also acknowledged, however, that the participant group consisted of AI experts and people familiar with the technology, suggesting that the experiment would yield different results if conducted with the general population.

Finally, Natali Helberger wrapped up the workshop with a keynote talk examining the benefits and risks of ChatGPT for democracy, and the role of the AI Act in this matter. Will the European Union protect us from big tech? Does legislation proceed too quickly, or not fast enough? And what are we really up against?

Natali argues that it is not enough to talk about AI exclusively as a technology. Rather, we should consider it a power, a mechanism for organising society, an inherently political tool. Democracy is about language, and if AI intercepts language, it could potentially destroy our ability to have meaningful conversations. But alongside the risks, AI also carries the hope of strengthening that ability.

How does the AI Act play into these dynamics? Does regulation limit technological innovation? Natali disputes that assumption. Instead, she argues that ethical and cultural considerations are just as pressing as technical questions in the development of AI. Addressing ethical issues can help innovation, and asking critical questions is a necessary tool for breaking through the dominance of big tech. It is less about the technology itself than about its operationalisation, what visions we have for AI, and what role we want it to play. Natali urges us to pay more attention to systemic risks to democracy and suggests that the real work is yet to begin.

We extend our thanks to the CWI team and Davide Ceolin for coordinating and hosting the workshop.

The workshop is part of CWI’s semester program on AI’s Impact on Media and Democracy and will be followed by a two-day event that dives deeper into questions of AI in a rapidly developing digital society and its effects on public and private life. The event, taking place on May 27-28, 2024, will host national and international experts in the field and is free to attend. Please register here.